ATLAS e-News

23 February 2011

M-Run on the TRT

23 January 2008

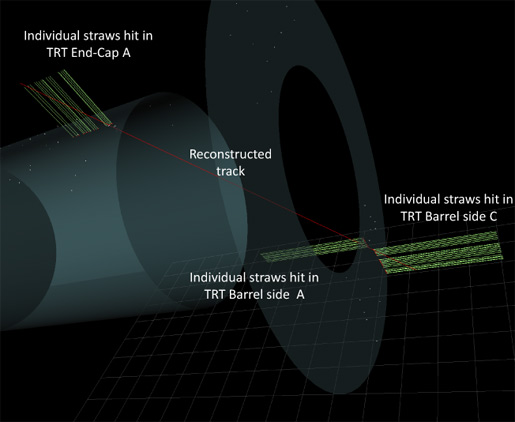

A cosmic ray traveling through the TRT crossed the top part of the A-side endcap and the bottom part of the barrel (near z=0).

A VP1 event display showing all the TRT hits (white dots) projected onto a surface perpendicular to the unconstrained coordinate: a disk in x-y for the barrel hits and a cylinder at the innermost radius of the TRT for the endcap hits. The hits associated with the reconstructed track (red line) are shown as full straws (green lines) to illustrate the position of the track along the straw.


In one of the last working weeks of 2007, the ATLAS TRT took the opportunity to run a full slice of the data acquisition chain for five days. The goals were very similar to those of the ATLAS milestone weeks: exercise as much of the full system as was available, discover and fix as many problems as possible, and record some useful cosmic ray data. The TRT had missed the previous milestone week (M5) because of other activities, so there was added motivation not to let too much time pass between milestone weeks that include the TRT.

In M4, the TRT readout was limited to 28% of the barrel (roughly 30k readout channels). For the December run period, the readout was expanded to 40% of the barrel, plus more than one-third of the A-side endcap.

The addition of the endcap was an important part of the run, as it gave the offline experts a chance to see whether their software could reconstruct tracks in the endcap alone, as well as tracks that pass through both the endcap and the barrel.

In the TRT, the barrel straws are arranged axially (parallel to the beam), while the endcap straws are radial. Every readout channel is therefore inherently two-dimensional, but the change of coordinate system at the barrel/endcap boundary makes combined barrel+endcap track reconstruction an interesting problem. Reconstructing simulated tracks in this region was mastered long ago, but with real data one never knows what to expect.
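To make the geometry concrete, here is a minimal Python sketch of which two coordinates a single straw constrains, and of the projection described in the event-display caption at the top of this article (barrel hits onto an x-y disk, endcap hits onto a cylinder at the innermost TRT radius). It assumes an idealized detector with perfectly axial barrel straws and perfectly radial endcap straws. The class and function names, the `region` labels and the `R_INNER_MM` value are hypothetical, chosen only for illustration; this is not the TRT reconstruction or VP1 code.

```python
import math
from dataclasses import dataclass

# Hypothetical placeholder for the innermost TRT radius (mm), for illustration only.
R_INNER_MM = 560.0


@dataclass
class StrawHit:
    x: float      # mm
    y: float      # mm
    z: float      # mm, along the beam axis
    region: str   # "barrel" or "endcap" (assumed labels)


def measured_coordinates(hit: StrawHit) -> dict[str, float]:
    """Return the two coordinates that a single straw hit constrains."""
    phi = math.atan2(hit.y, hit.x)
    if hit.region == "barrel":
        # Axial straw: the position along z is unknown; (r, phi) is measured.
        return {"r": math.hypot(hit.x, hit.y), "phi": phi}
    if hit.region == "endcap":
        # Radial straw: the position along r is unknown; (z, phi) is measured.
        return {"z": hit.z, "phi": phi}
    raise ValueError(f"unknown region: {hit.region!r}")


def project_for_display(hit: StrawHit) -> tuple[float, float, float]:
    """Project a hit onto a surface perpendicular to its unconstrained coordinate."""
    coords = measured_coordinates(hit)
    if hit.region == "barrel":
        # Barrel: flatten onto a disk in the x-y plane (z set to 0).
        return (hit.x, hit.y, 0.0)
    # Endcap: keep phi and z, and place the hit on a cylinder of radius R_INNER_MM.
    phi = coords["phi"]
    return (R_INNER_MM * math.cos(phi), R_INNER_MM * math.sin(phi), coords["z"])
```

A track crossing the boundary mixes hits that constrain (r, phi) with hits that constrain (z, phi), which is why a combined barrel+endcap fit cannot treat all hits the same way.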

In addition to the TRT, the Pixels also participated in the run period. Although the Pixel detector was not yet fully connected and ready by the end of December, a series of simulated events was generated by its readout electronics and fed through the DAQ chain. The inclusion of the Pixels in this week marked the beginning of a successful collaboration between the TRT and Pixel readout teams.

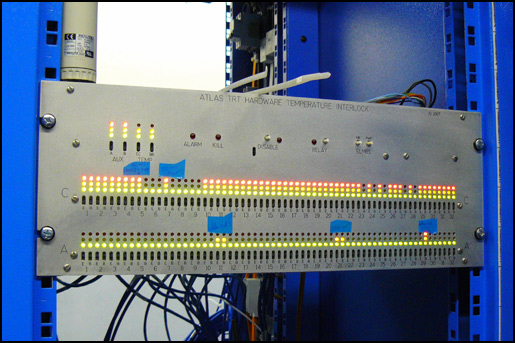

Another important step was the integration of the TRT hardware temperature interlock into the DCS framework. The interlock monitors the temperature of the front-end electronics and stands ready to cut power to the Maraton low-voltage power supply racks in case of an alarm condition. Although the kill mode was not yet enabled, the December run served as a first test of the interlock's functionality and stability.

The "Logic Box" of the TRT hardware interlock in USA15. The TRT hardware temperature interlock protects against over-temperature conditions on the front-end electronics. The interlock monitors almost 2000 temperature sensors in the cryostat, and can disable power to the electronics when a critical threshold has been reached.
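The decision logic described above (watch many sensors, raise an alarm above a critical threshold, cut power only when kill mode is enabled) can be modeled in a few lines. The real interlock is hardware (the Logic Box), so this Python sketch is only a software model of that logic under stated assumptions: the threshold value, the "at or above" comparison, the sensor names and the function name are all hypothetical and do not reflect actual TRT settings.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical critical threshold, for illustration only; not the real TRT setting.
CRITICAL_TEMP_C = 40.0


@dataclass
class InterlockDecision:
    alarm: bool              # at least one sensor reached the threshold
    hot_sensors: List[str]   # names of the offending sensors, sorted
    cut_power: bool          # True only if there is an alarm AND kill mode is on


def evaluate_interlock(readings: Dict[str, float], kill_enabled: bool,
                       threshold_c: float = CRITICAL_TEMP_C) -> InterlockDecision:
    """Flag sensors at or above the threshold; request a power cut only in kill mode."""
    hot = sorted(name for name, temp in readings.items() if temp >= threshold_c)
    alarm = bool(hot)
    return InterlockDecision(alarm=alarm, hot_sensors=hot,
                             cut_power=alarm and kill_enabled)
```

With `kill_enabled=False`, as during the December run, an over-temperature reading still raises the alarm but leaves the power on, which is what allows the alarm path to be tested without tripping the racks.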

The run period started on Monday morning with the integration of the subsystem software and databases into the TDAQ partition. After a few hours of integration and debugging, the testing program was ready to start. The evening/overnight shifts were then dedicated to cosmic ray running, while the day shifts were used to do readout tests.

For the collection of cosmic ray data, the Tile Calorimeter group offered to operate its cosmics trigger every night, an important and much-appreciated part of the program. In addition, four scintillators on top of ATLAS were installed in December to provide a precisely timed coincidence counter pointing towards the inner detector. The Tile Calorimeter trigger rate was close to 1 Hz, and the scintillator trigger had a rate of a few tenths of a hertz. Together, the two provided over 350k recorded triggers during the week, which have yielded over 15k reconstructed tracks.

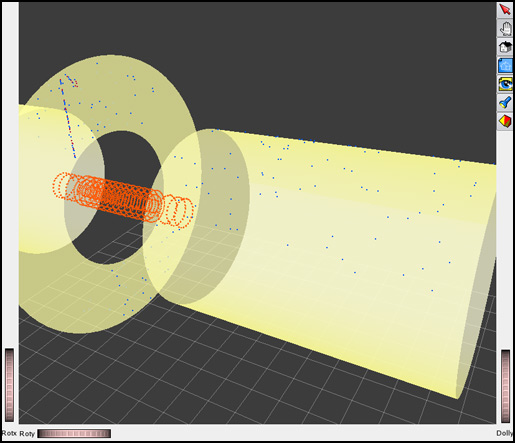

The day-shift program included several high-rate tests, checks of synchronization between the TRT and TDAQ systems, and tests of the ID-specific high-level trigger algorithms. The Level 2 track trigger algorithm had an efficiency close to that of the offline reconstruction, and provided data streams both for events with reconstructed tracks and for events with tracks pointing to the Pixel volume.

The Level 2 algorithms reconstructed cosmic ray muon tracks in real time and produced a data stream containing tracks pointing towards the Pixel detector. Although the Pixel readout here was simulated, such algorithms can eventually be used to provide alignment and calibration streams.

In parallel with these activities, the offline software team was refining its reconstruction algorithms, and the online monitoring group was debugging the TRT and ID AthenaPT-based online monitoring software. By the end of the week, the readout system was fully integrated with both the TRT and ID monitoring, and Tier-0 was running happily over raw data without any problems.

Looking ahead to M6 and beyond, there's still a very long list of things to be done. Going over that list at weekly meetings can make one wonder how it will all get finished. But a successful run period in December made coming back to work in January a whole lot easier, and it left the group excited and prepared for some extremely busy times ahead.

Peter Cwetanski, Indiana University

Mike Hance, University of Pennsylvania