ATLAS e-News

23 February 2011

Monte Carlo simulation: Mimicking the ATLAS response better and faster than ever

3 March 2008

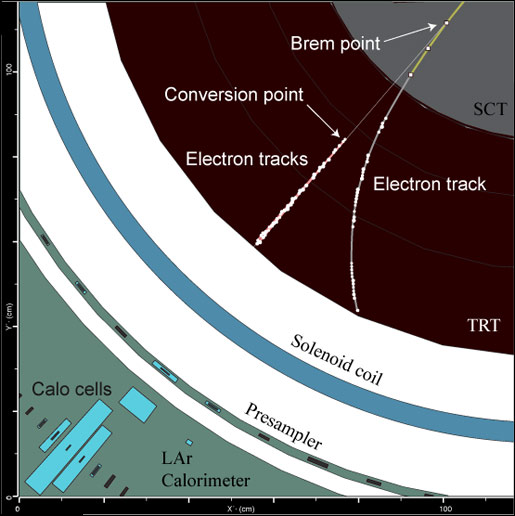

The new ATLFAST-II prototype with a fast inner detector simulation (above), shown after standard reconstruction has been run. Tracker hits and calorimeter energy cells are populated to mimic the full simulation.

The ATLAS simulation group faces the daunting task of predicting the signals our detector will produce when it turns on later this year. Detector description groups have been at work modeling every piece, from the support feet to the individual layers of lead in the liquid argon barrel and endcap calorimeters. Another group of experts has worked to match the simulated detector response to the best data we have: the test-beam measurements. Despite the best efforts of all involved, the result is clear: at around 5 minutes per event on a modern processor, the full simulation is too slow to produce all the Monte Carlo we will need in the coming years.

Several versions of fast simulation have been developed to help deal with the problem. They are at various stages of validation but are ready for users to try out in their analyses. The Physics Validation Group has already helped to identify gross problems, but far larger studies are needed to uncover unexpected problems and to fully benchmark the physics performance of each version. One user has already helped find a problem with ATLFAST-II: one bad jet in every few million simulated. It is now crucial that users help compare physics variables across the simulations so that we can fully understand and document the systematic errors associated with each of them.

Having these tools available may prove vital during early running as well, particularly if (or when) the full simulation cannot completely reproduce the data. At present, the only mechanisms in place for correcting reconstructed Monte Carlo exist for the fast simulations. It may also take quite some time to understand and correct discrepancies between the full simulation and data, since the full simulation has fewer obvious knobs to turn than the fast simulations do.

The Fast G4 Simulation Group has been dedicated to improving the CPU performance of the full simulation. For hadronic events, most of the simulation time is spent producing and showering photons and electrons in the famous Spanish fan of the endcap and the accordion of the barrel electromagnetic calorimeters. By replacing low-energy electromagnetic particles with pre-simulated showers from a library, physics events can be simulated two to three times faster with little adverse effect on analyses. The group's goal is to provide a faster simulation that is good enough for almost all analyses that would normally use the full simulation.
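The shower-library idea can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration, not the Fast G4 group's actual code: the `Shower` and `ShowerLibrary` classes, the binning in incident energy, the linear rescaling of deposits to the particle's energy, and the `threshold` cut are all hypothetical. Low-energy electrons and photons get a stored shower; everything else is handed back to the full tracking.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Shower:
    """A pre-simulated shower (hypothetical format)."""
    energy: float  # incident energy used when the shower was fully simulated (GeV)
    deposits: list = field(default_factory=list)  # (x, y, z, e) offsets from the shower start, e in GeV


class ShowerLibrary:
    """Hypothetical library of stored showers, binned in incident energy."""

    def __init__(self, showers, bin_width):
        self.bin_width = bin_width
        self.bins = {}
        for s in showers:
            self.bins.setdefault(int(s.energy // bin_width), []).append(s)

    def sample(self, energy, rng):
        """Pick a random stored shower from the matching energy bin, or None if the bin is empty."""
        candidates = self.bins.get(int(energy // self.bin_width))
        if not candidates:
            return None
        return rng.choice(candidates)


def simulate_particle(pid, energy, position, library, threshold, rng):
    """Return energy deposits for one particle, or None to request full tracking.

    Electrons/positrons (PDG id +-11) and photons (22) below `threshold` are
    replaced by a library shower whose deposits are scaled by energy / shower.energy
    (an assumed, simple rescaling) and translated to the particle's position.
    """
    if abs(pid) in (11, 22) and energy < threshold:
        shower = library.sample(energy, rng)
        if shower is not None:
            scale = energy / shower.energy
            x0, y0, z0 = position
            return [(x0 + x, y0 + y, z0 + z, e * scale) for (x, y, z, e) in shower.deposits]
    # Anything else (hadrons, high-energy particles, empty bins) goes to the full simulation.
    return None
```

For example, with a library holding one 1 GeV shower with deposits `(0, 0, 0, 0.5)` and `(0, 0, 1, 0.25)`, a 1.5 GeV electron at `(10.0, 0.0, 0.0)` receives deposits of 0.75 and 0.375 GeV, while a pion returns `None`. The design point is that the expensive step (tracking every shower particle through the absorber) is paid once when the library is built, not once per event.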

While the Fast G4 Group has been speeding up the existing simulation, the ATLFAST-II Group has built a new fast simulation, component by component, and has been painstakingly tuning it to the full simulation. The well-known ATLFAST-I was extremely fast but applied only simple momentum smearing and omitted many important detector effects. ATLFAST-II, by contrast, runs the standard reconstruction tools, so it can reproduce similar reconstruction efficiencies and fake rates and provide the same jet and electron objects in users’ analyses. Two flavors of ATLFAST-II are foreseen: one using the full inner detector simulation for b-tagging or inner detector studies, and one using a fast inner detector simulation, FATRAS, for higher-statistics samples. Both flavors should be ideal for users hoping for considerably greater statistics without having to make unphysical cuts or adjustments in their analyses.
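To make the contrast with ATLFAST-I concrete, here is a minimal sketch of what a smearing-only fast simulation does. It is an illustration under stated assumptions, not ATLFAST-I's actual parameterization: the resolution form (a constant term added in quadrature with a term growing linearly in pT) and the values of `a` and `b` are hypothetical.

```python
import math
import random


def relative_resolution(pt, a=0.01, b=0.0005):
    """Hypothetical tracker-like resolution: sigma(pT)/pT = a (+) b*pT, added in quadrature."""
    return math.hypot(a, b * pt)


def smear(truth_pts, rng):
    """Return one smeared pT (GeV) per truth particle.

    In a smearing-only fast simulation this is essentially the whole detector:
    every particle is kept (no inefficiency, no fakes, no reconstruction), and
    only its momentum is blurred by a Gaussian of the parametrized width.
    """
    return [pt * (1.0 + rng.gauss(0.0, relative_resolution(pt))) for pt in truth_pts]
```

Because nothing is ever lost or misidentified, such a model cannot produce the reconstruction efficiencies and fake rates that ATLFAST-II obtains by running the standard reconstruction on simulated hits and cells.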

Over the last six months, the Simulation Optimization Group and the Simulation Strategy Group have also been tackling the computing-time problem. The Strategy Group, relying on input from all the relevant groups, will produce a document in April containing its proposal for the 2008 simulation production. Some groups or samples may require the full simulation to give sufficiently accurate results. Others may need very high statistics or the ability to scan large parameter spaces. At the end of the day, we must find a fair way to dole out computing resources. Efforts are well underway to benchmark the effects of each simulation flavor on physics results and to guide users when they request samples.

With release 14, you can help finish the validation of these crucial simulation tools and ensure that your largest systematic error will not be Monte Carlo statistics!


Zachary Marshall

Columbia University