ATLAS e-News

23 February 2011

Trigger time!

14 June 2010

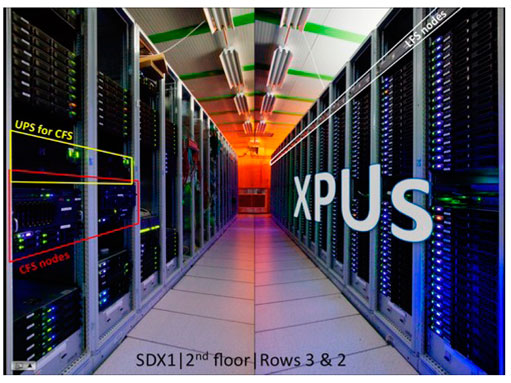

Racks of XPUs (Interchangeable Processing Units), which run the HLT trigger algorithms

Even though the LHC is currently delivering a luminosity roughly five orders of magnitude below its design value, peaking so far at $2 \times 10^{29}\,\mathrm{cm^{-2}\,s^{-1}}$ in ATLAS, the resulting collision rate is already too high for the experiment to store every event. With the most recent jumps in luminosity, the time has come for the High Level Trigger system to step up and get seriously involved…

“There are several stages of turning on the trigger,” explains Wojtek Fedorko, who is involved with trigger operations, and affectionately describes the system as “a somewhat complicated beast.”

The Level 1 Trigger – the hardware system that bases its snap decisions on the characteristics of energy clusters and energy sums in the calorimeter, or on hits in the muon trigger system – has been active since the very first collisions at 900 GeV last year. It also has a number of direct inputs, including the MBTS (Minimum Bias Trigger Scintillators) and the forward detectors. Essentially every proton-proton collision is flagged by these detectors when charged particles from the collision hit them, and until just a few weeks ago every such 'minimum bias' trigger was accepted by Level 1. At that time, events selected by the calorimeter and muon triggers made up only a small subset of those being recorded.

As instantaneous luminosity increased, higher event rates made it impossible to write out each and every one to disk, so the minimum bias triggers were 'prescaled' such that only a known fraction of each type of event is now kept.

The prescale values are calculated for a given luminosity, based on known trigger rates from previous runs: each event type is accepted only once per n occurrences, and the more common the event type, the greater the value of n. The prescales are tuned so that ATLAS writes out data at just below the available bandwidth (a measure of how much data can be stored per second), and this scaling is then taken into account at the analysis stage.
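The prescaling described above can be sketched in a few lines of Python. This is a minimal illustration, not ATLAS trigger software: it assumes a simple deterministic counter that accepts every n-th occurrence, picks n as the smallest integer that brings a trigger's expected rate within its share of the bandwidth, and uses made-up rates. The names `prescale_factor` and `Prescaler` are hypothetical.

```python
import math


def prescale_factor(expected_rate_hz: float, allotted_rate_hz: float) -> int:
    """Smallest integer n so that expected_rate_hz / n fits in allotted_rate_hz.

    Hypothetical helper: real prescale sets also account for overlaps
    between triggers and for the luminosity they are tuned for.
    """
    if allotted_rate_hz <= 0:
        raise ValueError("allotted rate must be positive")
    return max(1, math.ceil(expected_rate_hz / allotted_rate_hz))


class Prescaler:
    """Deterministic counter that accepts one occurrence in every n."""

    def __init__(self, n: int) -> None:
        if n < 1:
            raise ValueError("prescale factor must be at least 1")
        self.n = n
        self.count = 0

    def accept(self) -> bool:
        self.count += 1
        if self.count == self.n:
            self.count = 0  # reset so the next accept comes n occurrences later
            return True
        return False


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    n = prescale_factor(expected_rate_hz=5000.0, allotted_rate_hz=200.0)
    prescaler = Prescaler(n)
    kept = sum(prescaler.accept() for _ in range(1000))
    # At the analysis stage each kept event stands for n occurrences.
    print(n, kept, kept * n)  # 25 40 1000
```

With a hypothetical minimum bias rate of 5000 Hz and a 200 Hz allocation, n comes out at 25; of 1000 occurrences, 40 are kept, and weighting each kept event by n at the analysis stage recovers the original count of 1000.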

The High Level Trigger (HLT) – which uses software at the Level 2 and Event Filter stages to pick out the more interesting events – has actually been running since the first day of high-energy collisions, March 30th. But it wasn't run in 'physics rejection mode' until recently; instead, it simply piped all events from Level 1 straight to disk.

Overnight on Monday May 24th, the peak luminosity recorded by ATLAS almost tripled, and the HLT began rejecting electron triggers for the first time.

“The [electromagnetic trigger] thresholds at Level 1 are pretty low – we can accept at around 300 Hertz,” explains Wojtek, “but now that the triggers are firing above this frequency, we need to reject a fraction of them using more sophisticated algorithms at Level 2.”
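The arithmetic behind Wojtek's remark is simple. If Level 1 fires an electromagnetic trigger at a rate $R_{\mathrm{L1}}$ and only about $R_{\max} \approx 300$ Hz of those events can be accepted, then for $R_{\mathrm{L1}} > R_{\max}$ the fraction that Level 2 must reject is given below. The 900 Hz input rate in the example is an illustrative assumption, not a figure from the article.

```latex
f_{\mathrm{reject}} = 1 - \frac{R_{\max}}{R_{\mathrm{L1}}},
\qquad
\text{e.g. } R_{\mathrm{L1}} = 900~\mathrm{Hz}
\;\Rightarrow\;
f_{\mathrm{reject}} = 1 - \frac{300}{900} = \frac{2}{3}.
```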

“Of course the game becomes more complicated once you have more triggers in this regime,” he cautions. As the electromagnetic trigger rates rise, so do the rates of other triggers, and distributing bandwidth gets trickier. “Essentially, it's solving a big equation, but an additional complication is that triggers are correlated.”
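Why correlations complicate the "big equation" can be seen with a toy sample. In the sketch below, which is an illustration and not the ATLAS rate tool, each sampled event is represented by the set of trigger names it fired; the trigger names `EM` and `MU`, the 1000 Hz input rate and the ten-event sample are all made up. Because an event that fires two triggers is written out only once, simply adding the individual trigger rates overestimates the bandwidth actually used.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple


def trigger_rates(
    events: List[Set[str]], input_rate_hz: float
) -> Tuple[Dict[str, float], float, float]:
    """Return (per-trigger rates, naive summed rate, actual output rate).

    Each entry of `events` is the set of (hypothetical) trigger names that
    fired for one sampled event; every sampled event stands for an equal
    share of the input rate.
    """
    n = len(events)
    counts = Counter(name for fired in events for name in fired)
    per_trigger = {name: input_rate_hz * c / n for name, c in counts.items()}
    naive_total = sum(per_trigger.values())
    # An event is recorded once if at least one trigger fired, however many did.
    actual_total = input_rate_hz * sum(1 for fired in events if fired) / n
    return per_trigger, naive_total, actual_total


if __name__ == "__main__":
    sample = [{"EM", "MU"}, {"EM"}, {"MU"}, set(), {"EM", "MU"},
              set(), set(), set(), set(), set()]
    per_trigger, naive, actual = trigger_rates(sample, input_rate_hz=1000.0)
    print(per_trigger["EM"], per_trigger["MU"], naive, actual)  # 300.0 300.0 600.0 400.0
```

The 200 Hz gap between the naive sum and the actual output rate is the overlap. Dropping `EM` entirely in this toy sample would free only 100 Hz rather than 300 Hz, because two of its three events are kept by `MU` anyway, which is why the rates have to be balanced together rather than one trigger at a time.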

Here, the trigger team faces a choice: either prescale at Level 2 or activate the HLT algorithms. The former would guarantee that the HLT was not biasing the sample, but the latter would avoid throwing away interesting data.

“So what the electron trigger group decided to do was trust. They were reasonably confident in their triggers,” says Wojtek. To check whether that trust is well placed, a small fraction of events is accepted without applying the HLT selection, so that bias studies can be pushed further.
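One simple way to frame such a bias study is sketched below, as an assumption about the general method rather than a description of the egamma group's actual analysis. Events recorded without the HLT selection are not biased by it, so checking the HLT decision on those that contain an offline-identified electron gives an estimate of the trigger efficiency. The function name, record layout and numbers are hypothetical.

```python
import math
from typing import List, Tuple


def hlt_efficiency(passthrough: List[Tuple[bool, bool]]) -> Tuple[float, float]:
    """Estimate HLT efficiency and its binomial uncertainty.

    Each entry is (has_offline_electron, hlt_would_accept) for one event
    recorded without HLT rejection (hypothetical record layout).
    """
    # Only events with an offline-identified electron enter the denominator.
    decisions = [accepted for has_electron, accepted in passthrough if has_electron]
    if not decisions:
        raise ValueError("no events with an offline electron in the sample")
    n = len(decisions)
    eff = sum(decisions) / n
    err = math.sqrt(eff * (1.0 - eff) / n)  # simple binomial error
    return eff, err


if __name__ == "__main__":
    # Hypothetical sample: 8 events with an offline electron, 7 accepted by the HLT.
    sample = [(True, True)] * 7 + [(True, False)] + [(False, False)] * 12
    eff, err = hlt_efficiency(sample)
    print(f"efficiency = {eff:.3f} +/- {err:.3f}")
```

The simple binomial error used here becomes unreliable for efficiencies close to 0 or 1 or for small samples, where intervals such as Clopper-Pearson are usually preferred.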

“Based on data which have been taken during the last couple of months we were able to estimate the performance of our low energy electromagnetic triggers,” explains Rainer Stamen, deputy coordinator of the egamma signature group. “So we were confident in the system before going into the rejection mode. Once we went into rejection everything behaved as expected. The fraction of rejected events followed our predictions and the data did not show any problem.”

On top of this, there is a continuous effort to monitor the quality of the triggers.

“Every run, we take a small portion of events and re-run them offline,” says Wojtek. “There we look and see if the baseline performance is ok – do the algorithms take too much time, do they crash? And also, do they make sense?”

From an operational point of view, things have been working very well so far, which is good news, because it's only going to get more complicated for the Trigger from here on in.

“I would say this is a big boundary, but there are going to be a lot of smaller boundaries where we have to move up in thresholds,” Wojtek summarises. “It's very exciting and it's going to be getting more and more complicated and exciting as the luminosity goes up.”

Ceri Perkins
