ATLAS e-News

23 February 2011

ATLAS upgrade gains intensity

24 March 2009

The impressive turnout for the inner tracker workshop held at Nikhef, Amsterdam, in November 2008.

Even though the LHC has not yet delivered collisions and ATLAS is still finalising preparations for data taking, there are plans being hatched for major improvements in both. The LHC intends to implement changes over the next 10 years or so, eventually allowing it to deliver ten times its original design luminosity. Some major detector parts of ATLAS will reach their end-of-life in this time, and others will need improvements to cope with the higher luminosity. These detector changes make up the ATLAS Upgrade programme.

Developing and building ATLAS took a long time: it is 17 years since the ATLAS Letter of Intent was written. So while it would be great to sit back and concentrate on the ATLAS physics programme, it is also vital that preparations are already under way for the Upgrade, to ensure continuity of ATLAS beyond 2018. A growing body of physicists, engineers and technicians has been working on it since about 2003.

The LHC expects to deliver collisions before the end of 2009. Initially these will be at low rate, but rising rapidly to higher intensities. After a few years, it will reach the nominal design luminosity of $1 \times 10^{34}\ \mathrm{cm^{-2}\,s^{-1}}$. It will not rest there though: in the LHC Phase-I Upgrade, a new Linac will allow more intense beam in the LHC; and new quadrupole magnets either side of the ATLAS cavern will allow tighter focussing of the beam. These should be ready for use in 2014, and together will allow the luminosity to reach 3 times nominal. Beyond that, the LHC team has plans for a Phase-II LHC Upgrade, with various changes leading to 10 times the nominal luminosity by 2019, in a project known as the super-luminous LHC, or sLHC.

It was challenging enough to build ATLAS to cope with nominal luminosity, let alone 10 times this. Depending on the final sLHC scheme, there will be up to 400 proton-proton scatterings per bunch crossing, filling ATLAS with a high density of particles. There are at least two factors to help us here: we have gained experience from designing and building the current detector; and technology has advanced since ATLAS was designed. We expect to handle ten times the luminosity for an extra investment of a little less than half the original component cost of ATLAS.
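To get a feel for where a number of scatterings per bunch crossing comes from, here is a rough back-of-envelope sketch. It divides the interaction rate (luminosity times cross-section) by the bunch-crossing rate. The inelastic cross-section of about 80 mb, the 2808 colliding bunches and the revolution frequency of about 11.245 kHz are assumptions introduced for illustration, not figures from this article, and the function name `mean_pileup` is hypothetical.

```python
# Back-of-envelope pile-up estimate. Everything except the two
# luminosity values is an assumption, not a figure from the article.

SIGMA_INEL_CM2 = 80e-27   # assumed inelastic pp cross-section (~80 mb; 1 mb = 1e-27 cm^2)
N_BUNCHES = 2808          # assumed number of colliding bunch pairs
F_REV_HZ = 11245.0        # assumed LHC revolution frequency (~11.245 kHz)


def mean_pileup(luminosity_cm2_s, n_bunches=N_BUNCHES,
                sigma_cm2=SIGMA_INEL_CM2, f_rev_hz=F_REV_HZ):
    """Mean number of pp interactions per bunch crossing."""
    interaction_rate = luminosity_cm2_s * sigma_cm2   # interactions per second
    crossing_rate = n_bunches * f_rev_hz              # bunch crossings per second
    return interaction_rate / crossing_rate


nominal = mean_pileup(1e34)   # nominal design luminosity
slhc = mean_pileup(1e35)      # ten times nominal

print(f"nominal: {nominal:.0f} interactions per crossing")
print(f"sLHC:    {slhc:.0f} interactions per crossing")
```

Under these assumptions the pile-up scales linearly with luminosity, and packing the same luminosity into fewer bunches (a smaller `n_bunches`) raises it further, which is why the final figure depends on the sLHC scheme chosen.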

There are many reasons for wanting higher luminosity. If there is a Standard Model Higgs particle in Nature, it should be found rather soon at the LHC. But that is not the end of the task: we will want to measure the properties of this new particle – how it is created and how it decays – and compare these measurements to predictions from various models. Have we discovered a Standard Model Higgs, conforming to all predictions? Or does it deviate somewhere, hinting at physics beyond the Standard Model? These studies require precision measurements of some rare processes, for which much more data is needed than for the basic discovery. There are many similar examples – if we find something at the LHC, we will need more data to understand just what has been found.

Another advantage of a much bigger data set is in extending the discovery potential for new particles to higher masses. The probability that a quark or gluon constituent of the proton carries a large fraction of the proton energy is very small, but not zero. So on rare occasions, two such high energy constituents will collide in ATLAS with several TeV combined energy, allowing new heavy particles to be produced. Higher luminosity makes such rare processes more frequent, extending the mass reach of ATLAS.

Several changes are needed in ATLAS to take full advantage of the LHC upgrades. Phase-I occurs before radiation damage has become significant, so all ATLAS detectors should still be working well. However, the innermost ATLAS detector, the pixel B-layer, which sits 50 mm from the beam axis, will become inefficient as the particle rate increases beyond its design range. It will also suffer radiation damage before the first replacement opportunity, which will be about 2018 in preparation for sLHC. It is very difficult to remove and replace, and should still be working at some level. So we have decided to leave it in place, and insert a new B-layer in 2014 in the gap between the beam-pipe and the current B-layer – currently only 9 mm wide. Discussions are under way to agree on a smaller beam-pipe to increase this gap. Various other changes are under investigation for Phase-I, including new muon chambers to replace the cathode strip chambers in the forward-most region of the muon spectrometer, and beefing up the trigger and data acquisition system.

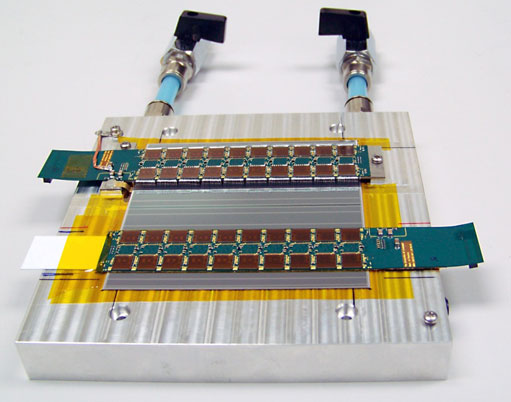

Much more is required for the sLHC. The current inner detector will be radiation-damaged, and will not be able to cope with the higher track rate. It will be completely replaced with a new silicon detector. New technologies in silicon sensors and electronics chips will survive the higher radiation dose. By making the active elements smaller, the hit rate in a particular element will remain low, allowing tracks to be found with a good success rate. The pixel part nearest the beam will be extended to larger radius than now; the strips region will use shorter strips – 25 mm compared to 120 mm now; and the transition radiation straws will be replaced by silicon strips 100 mm long. Development has been under way for several years now, and recently prototype sensors and readout chips have been delivered and are under test.

The liquid argon and tile calorimeter detectors will continue to perform well at the sLHC. However, they need new readout electronics to cope with the higher radiation dose and also to allow all data to be sent off-detector to electronics racks, allowing the trigger to access the full calorimeter granularity. Another worry is the forward-most part of the argon calorimeter, the so-called FCAL. The high particle flux here will deposit several hundred Watts of power, leading to many problems. Investigations are under way at a test-beam in Protvino into whether this might even cause the argon to boil. Two solutions are under investigation. One is to replace the FCAL with a new design, which is difficult: it is sealed in a welded cryostat and the replacement would take up most of the 18-month shut-down planned for the upgrade. The other possible solution is to insert a mini-calorimeter in front, shielding the FCAL from about half of the power deposit.

Several muon chambers in the forward region will be overwhelmed at the sLHC. New chamber types are under investigation as replacements. Some of these will combine trigger and precision measurement, saving space which can be used for shielding and so reducing the hit rates in other chambers.

The trigger will also need considerable enhancements. The basic idea is to keep the trigger accept rates the same as now at each level. This means rejecting ten times more events at level-1, and handling roughly ten times more data at all levels. Several ideas are under investigation.

All this has to be ready for installation in 2018, when we anticipate a long shut-down of about 18 months. Work has been going on for several years now, at an increasing pace as the current ATLAS was completed, freeing up effort for the upgrade. The work is brought together in upgrade meetings, with all systems having workshops in 2008. In 2009 we have started holding ATLAS Upgrade Weeks, with all systems participating together. In February, over 200 people joined the first one at CERN. They presented progress reports, held technical discussions and planned for the future. It is not possible to summarise all the work here, but one humble picture stood out: the first prototype strip module, using the newly developed sensors, readout chips and circuit board. And great news: it worked, first try!

Nigel Hessey, NIKHEF
