ATLAS e-News

23 February 2011

On the spot

27 April 2010

Location of the beam spot

It’s almost inconceivable now to think of ATLAS data-taking without an online beam spot measurement. But just a couple of years ago, the prospect of mapping the overlapping region where the beams collide and the physics begins in real time was but a glimmer in ATLAS’s eye.

Although the LHC has ‘beam instrumentation’ monitoring the size and position of the beams at thousands of sites around the ring, the operators have no direct means of getting information from the experimental collision points – arguably the locations where knowing the beam’s characteristics is most critical for the experiments.

“There’s some 40-odd metres where they’re blind, because our detector is in the way,” says grad student David Miller. “But in that sense we have a really good, really expensive beam position monitor: our Inner Detector.”

David began looking at the beam spot as a “side project” two years ago, under SLAC supervisors Su Dong and Rainer Bartoldus, but it soon developed into much more. “It’s become too popular is the problem!” he laughs. The online reconstruction which he works on uses dedicated super-fast tracking algorithms and vertex fitting software to reveal a three-dimensional picture of where the luminous region – the tilted ellipsoid volume “where everything is colliding” – is in space.

The online reconstruction of the beam spot employs high-speed algorithms built into the Level 2 Trigger, to make use of as much input data as possible, as quickly as possible. The offline beam spot reconstruction, by contrast, is done at the Tier 0 processing stage, once the number of events to be saved has been whittled down. Since speed is less of a constraint offline, more precise tracking algorithms are used there to carefully reconstruct the collision vertices for physics analysis.

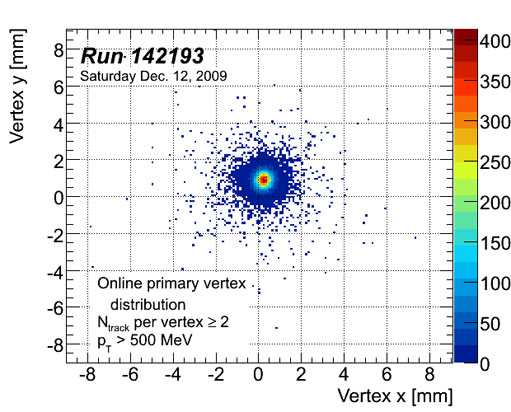

From the Trigger desk in the ATLAS Control Room, shifters can see real-time online plots of the X, Y, and Z positions of the beam as a function of time, and a scatter plot in the XY plane showing what the beam looks like head-on in ATLAS.

“Some measurements, like the tilt of the beam, we spend a bit more time figuring out because we need data from along the entire Z-axis,” says David, “but for the basic distributions – the X, Y, and Z – we get very good determinations every two minutes.”
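As a rough illustration of the quantities described above, the sketch below estimates a centroid, widths, and a tilt from a list of reconstructed vertex positions. It is a toy under stated assumptions, not the ATLAS trigger code: the function names (`estimate_beam_spot`, `simulate_vertices`), the Gaussian beam model, and the units are all hypothetical, and a real measurement would also have to account for vertex resolution.

```python
import random
import statistics


def estimate_beam_spot(vertices):
    """Estimate centroid, widths, and tilts from (x, y, z) vertex positions.

    Hypothetical helper for illustration only. The tilt is taken as the
    slope of x (or y) against z, which is why vertices spread along the
    whole Z-axis are needed to pin it down.
    """
    xs = [v[0] for v in vertices]
    ys = [v[1] for v in vertices]
    zs = [v[2] for v in vertices]
    mean_x, mean_y, mean_z = (statistics.fmean(c) for c in (xs, ys, zs))
    var_z = statistics.pvariance(zs, mean_z)

    def slope_vs_z(coords, mean_c):
        # Least-squares slope: cov(c, z) / var(z)
        cov = sum((c - mean_c) * (z - mean_z) for c, z in zip(coords, zs)) / len(zs)
        return cov / var_z

    return {
        "centroid": (mean_x, mean_y, mean_z),
        "widths": (
            statistics.pstdev(xs, mean_x),
            statistics.pstdev(ys, mean_y),
            var_z ** 0.5,
        ),
        "tilt_xz": slope_vs_z(xs, mean_x),
        "tilt_yz": slope_vs_z(ys, mean_y),
    }


def simulate_vertices(n, centre, widths, tilt_xz, seed=1):
    """Generate toy vertices from an assumed Gaussian beam tilted in x-z."""
    rng = random.Random(seed)
    vertices = []
    for _ in range(n):
        z = rng.gauss(centre[2], widths[2])
        x = rng.gauss(centre[0] + tilt_xz * (z - centre[2]), widths[0])
        y = rng.gauss(centre[1], widths[1])
        vertices.append((x, y, z))
    return vertices
```

Calling `estimate_beam_spot(simulate_vertices(20000, (0.5, -0.3, 10.0), (0.05, 0.05, 40.0), 0.001))` should recover values close to the simulated centre and tilt. The tilt estimate improves as the vertices span a longer stretch in Z.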

Beam drifting in the transverse (XY) plane is an important factor in the determination of beam lifetime, since it is linked to how many protons fall out of the bunch as a function of time. Knowing how much the beam has spread out in XY with time is important for understanding the luminosity. And bunch lengthening or drifting in the Z-direction is important for knowing the collision point accurately: “It’s a known effect,” says David, “but the absolute value of the change is important information for the accelerator physicists.”

Indeed, keeping tabs on the location and quality of the beam, in order to relay the information back to the LHC operators, is half the purpose of the online beam spot determination.

“Certainly for the first data, it was really cool because there was a lot of feedback back-and-forth at that level, and the LHC operations guys were really interested in what we were doing.”

Feeding up-to-date beam size and position information back into the ATLAS High Level Trigger is the other key goal of the online beam spot determination, in particular for Level 2 b-tagging processes, which are only efficient if they know where the tracks should be coming from: “If you’re ignorant of the fact that the beam has moved when you’re deciding which events are interesting, you could make a mistake and either throw away interesting stuff or keep boring stuff,” explains David, adding: “It’s an incredible technological feat that we’re able to redistribute information determined online back to the same processors making that determination.”

The obvious question here is – what if you’ve got your calculation wrong and are simply propagating an error? If your determination of beam location is flawed, plugging this information back into the algorithms that got it wrong in the first place is useless.

“Which is why we write the result both to the database and to the Calibration Stream,” assures David, referring to the offline measurement and the fraction of events that are written out for calibration, so that they can be checked for coherence and consistency.

In the months before collisions, the beam spot determination processes were prodded and poked with simulated data to see how they performed under different circumstances, such as purposely shifting the beam position away from the nominal XYZ = (0, 0, 0). “At first, we noticed that some of the algorithms had implicit selections inside that were preventing us from seeing an offset beam,” says David. “So those were fixed ahead of time. They were only small changes, but it’s something that would’ve been easy to miss without those tests.”
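The effect of such an implicit selection can be shown with a toy example. The sketch below assumes a one-dimensional Gaussian beam and a hypothetical hard-coded acceptance window around zero. The names `mean_position` and `simulate_positions` are invented for illustration and do not correspond to any ATLAS software.

```python
import random
import statistics


def simulate_positions(n, true_centre, width, seed=7):
    """Toy vertex positions along one axis, drawn from an assumed Gaussian beam."""
    rng = random.Random(seed)
    return [rng.gauss(true_centre, width) for _ in range(n)]


def mean_position(positions, window=None):
    """Mean position, optionally after an implicit |position| < window cut.

    The window stands in for a selection buried inside an algorithm that
    silently assumes the beam sits at the nominal origin.
    """
    if window is not None:
        positions = [p for p in positions if abs(p) < window]
    return statistics.fmean(positions)


# A beam shifted to 0.9 (arbitrary units) with width 0.2
positions = simulate_positions(10000, true_centre=0.9, width=0.2)
unbiased = mean_position(positions)             # close to the true 0.9
biased = mean_position(positions, window=1.0)   # pulled back towards zero
```

With the window applied, the upper tail of the distribution is cut away and the measured mean lands noticeably below the true offset. Tests with a deliberately shifted beam are what expose this kind of bias.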

Now that collisions are in full swing, real testing is possible and getting the ‘Level 2 feedback mechanism’ in place has become the main focus.

“We’ve been busy checking the results that we’ve been getting so far, before we feed them back. We’re holding off and really putting a lot of effort into making sure that the distributions we’ve reconstructed are not biased, comparing them to the offline measurements which are more precise, and making sure that the answers we get are consistent.”
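One simple way to picture this kind of cross-check is a pull test: the difference between the online and offline values, divided by their combined uncertainty. The sketch below is an assumption about how such a comparison could be written, not a description of the actual ATLAS procedure. The function names, the threshold of 3, and the numbers in the usage note are all hypothetical.

```python
import math


def pull(online, online_err, offline, offline_err):
    """Difference between two measurements in units of their combined uncertainty."""
    return (online - offline) / math.hypot(online_err, offline_err)


def consistency_report(online, offline, max_pull=3.0):
    """Compare online and offline results parameter by parameter.

    Both arguments map a parameter name to a (value, uncertainty) pair.
    Returns a dict of name -> True if |pull| is within max_pull.
    """
    return {
        name: abs(pull(*online[name], *offline[name])) <= max_pull
        for name in online
    }
```

For example, `consistency_report({"x": (0.502, 0.002), "z": (12.0, 0.5)}, {"x": (0.500, 0.001), "z": (8.0, 0.3)})` flags `x` as consistent and `z` as inconsistent. A parameter that fails would be a reason to hold off on feeding it back to the trigger.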

The basic infrastructure for the feedback mechanism is in place; what remains is to road-test it fully online. Tests at 7 TeV are underway.

Ceri Perkins, ATLAS e-News
