ATLAS e-News

23 February 2011

Projecting Higgs limits

1 November 2010

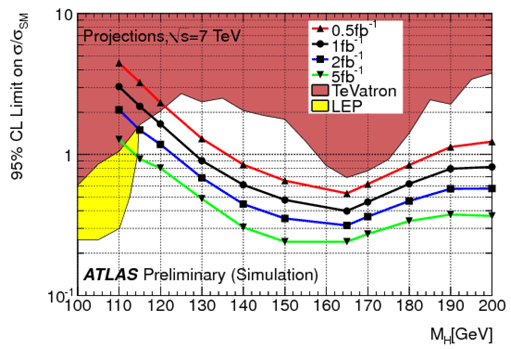

Plot showing the effect on the 95% exclusion limits of increasing the integrated luminosity from 0.5 inverse femtobarns to 5 inverse femtobarns at 7 TeV. For example, with only 0.5 fb⁻¹ one could exclude a Standard Model Higgs with a mass between 135 and 188 GeV, while with 5 fb⁻¹ of total integrated luminosity for ATLAS alone the excluded range would extend from nearly the LEP limit of 114 GeV to several hundred GeV.

It has been a good year for the LHC. Since the start of 7 TeV running, regular multiple-fold increases in integrated luminosity have become the norm, and last month the machine hit, and then quickly doubled, its 2010 peak instantaneous luminosity goal of 10³² cm⁻² s⁻¹. All these successes have got the ambitious wondering, though… 'What if we were to raise the energy for the 2011 run?'

The LHC's aim for 2011 is simple: to deliver one inverse femtobarn of data to the experiments before the year is out. But a surprise announcement from the Tevatron in July, excluding the existence of a Higgs with a mass of 110 GeV or below, got people at CERN asking questions.

“[The Tevatron] hopes to have enough evidence by the end of next year to make an exclusion, though not a discovery, from 110 GeV to 180-ish. Which is the region where everybody now assumes the Standard Model Higgs must be,” says ATLAS Higgs convenor Bill Murray.

With the Tevatron expecting to be able to “exclude the Standard Model” by the end of next year, CERN's LHC Experiments Committee (LHCC) “started to get interested in this discussion again, and asked us to go back and have another look at what we could really do at these points”.

In 2008, the 'CSC notes' document, which brought together two intense years of high-precision advanced studies of the ATLAS physics potential, concluded that a Higgs with a mass over 130 GeV should be easy to spot. Anything below that mass, it said, would require a very large dataset before a clear discovery could be made.

But, says Bill, ATLAS's recent Higgs sensitivity studies left out an analysis of Higgs decays to b-quarks, which is precisely the channel on which the Tevatron's new low-mass Higgs exclusions are based. “We'd left it out for good reasons,” he explains. “It was difficult, it was hard to do, and we were looking at cleaner decay modes. But in the meantime people have come along with clever ideas and found ways that we can actually use this channel.”

In a new study, Bill and a team of experts build on their preliminary Higgs sensitivity study from this summer, which took three of the most powerful Higgs decay modes and scaled the results of previous, higher-energy notes down to 7 TeV to approximate how sensitive ATLAS might be to Higgs particles at that energy. The new study augments this by scaling down two further channels (the aforementioned b-quark channel, thanks to Standard Model co-convenor Jon Butterworth's team, and a tau decay channel) and by adding two completely new channels in which the Higgs decays via Z bosons.

Taking into account theorists' latest Higgs production rate estimates, the projections for these seven decay modes were adapted to energy scenarios of 7, 8 and 9 TeV, to try to establish whether the possible scientific gains merit the time lost setting up the machine for higher-energy running. As well as expanding the Higgs sensitivity range, running at higher energy naturally squeezes the beams, resulting in increased data rates.

“It was a clear operational question,” says Bill; one that the new report produces an answer to: “Basically we need three quarters as much data at 8 TeV as we need at 7 TeV [to achieve the same sensitivity in a Higgs search]. And if it takes two months or so to set up, depending on how long the run is, we're probably just about breaking even. Maybe winning a little bit.”
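Bill's break-even estimate can be made concrete with a back-of-the-envelope calculation. The sketch below is purely illustrative and rests on simplifying assumptions that are not from the report: the machine delivers the same luminosity per month at 7 and 8 TeV (ignoring the higher data rates that higher-energy running brings), setup for 8 TeV takes exactly two months, and 8 TeV needs exactly three quarters of the 7 TeV data. The function names are hypothetical.

```python
def equivalent_7tev_months(run_months: float, setup_months: float = 2.0,
                           data_factor: float = 0.75) -> float:
    """Months of 7 TeV running giving the same Higgs sensitivity as a run that
    first spends `setup_months` preparing for 8 TeV, then takes data.

    Hypothetical model: equal luminosity per month at both energies, so the
    amount of data is proportional to the time spent taking data.
    """
    data_months_8tev = max(run_months - setup_months, 0.0)
    # 8 TeV needs only `data_factor` as much data for the same sensitivity,
    # so each month at 8 TeV is worth 1 / data_factor months at 7 TeV.
    return data_months_8tev / data_factor


def break_even_run_months(setup_months: float = 2.0,
                          data_factor: float = 0.75) -> float:
    """Run length T where (T - setup) / factor == T, i.e. T = setup / (1 - factor)."""
    return setup_months / (1.0 - data_factor)


if __name__ == "__main__":
    print(break_even_run_months())  # 8.0
    for months in (6, 8, 10, 12):
        print(f"{months}-month run at 8 TeV ~ "
              f"{equivalent_7tev_months(months):.2f} months at 7 TeV")
```

Under these assumptions the two options break even for a run of about eight months; shorter runs favour staying at 7 TeV and longer ones favour the switch. Any gain in data rate at 8 TeV would shift the balance further towards higher energy, which fits Bill's "maybe winning a little bit".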

The report states that one inverse femtobarn of data at 7 TeV should make ATLAS sensitive to Higgs bosons with masses in the range 129 to 460 GeV. Two inverse femtobarns at 8 TeV would extend the reach in both directions, giving a sensitive region of 114 to over 500 GeV. And, although not discussed at length, running at 9 TeV would raise the upper boundary to somewhere around 600 GeV.

The focus, though, is on the lower region. “That's where we expect the [Standard Model] Higgs to be, but we've built this machine because we don't know what the physics is. We're trying to find out what's there,” says Bill. Covering the range down to 114 GeV would also mean picking up where LEP data left off, so that CERN-derived results (with a few points of decreased sensitivity) would then span the whole range from 0 to 600 GeV.

Of course, although the ATLAS detector is technically ready to take data at higher energy, doing so would mean a lot of work, in parallel with data-taking, to re-calibrate the background estimates. And, independent of the experiments' concerns, the LHC must assess the greater operating risks that an energy shift might bring.

The question of what energy to run at in 2011 has also become intertwined with a discussion about whether or not to extend the run into 2012; if there's a two-year run on the cards, it makes a lot more sense to spend two months setting up for higher energy before it starts. “I think everybody is now pushing towards the idea that we would continue to run in 2012, particularly if we get higher data rates than currently,” says Bill.

The run length, energy, and luminosity evolution strategy will all be thrashed out at the Chamonix LHC meeting in January, but Bill is already sure of one thing. As he told the ATLAS Weekly meeting last week: “2011 will be the year of the Higgs.”

Ceri Perkins
