ATLAS e-News

23 February 2011

LumiBlock and LumiCalc services for ATLAS users

9 February 2010

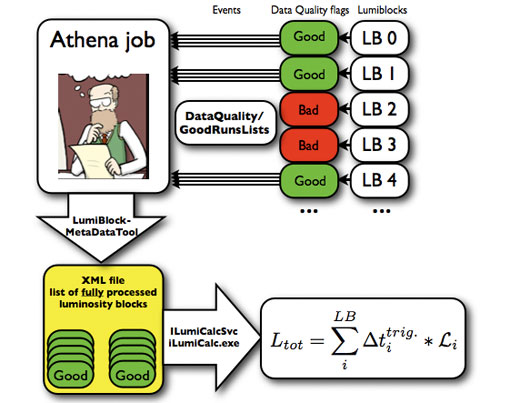

A simplified view of the ATLAS distributed analysis system.

With the new era of collisions at the LHC, ATLAS users need tools to integrate the luminosity corresponding to the analysed data in order to make physics measurements.

Luminosity at ATLAS will be measured and monitored in several ways, which fall into absolute and relative measurements. One type of absolute measurement uses the beam parameters to determine the luminosity (e.g. van der Meer scans of the beams). Other absolute measurements make use of the optical theorem, which relates the total rate of proton-proton interactions to the rate of forward elastic scattering (Coulomb scattering and similar processes can also be exploited). Roman pots will be used for these measurements once the ALFA system is installed.
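For reference, the optical-theorem approach can be summarised by the standard textbook relation below, written in natural units (ħ = c = 1). Here ρ is the ratio of the real to the imaginary part of the forward elastic scattering amplitude and t is the Mandelstam variable (four-momentum transfer squared). Writing the measured rates as R_tot = L σ_tot and dR_el/dt = L dσ_el/dt, the luminosity L follows without knowing σ_tot in advance. This is general background, not a description of the specific ATLAS/ALFA analysis procedure.

$$\sigma_{\text{tot}}^2 = \frac{16\pi}{1+\rho^2}\left.\frac{d\sigma_{\text{el}}}{dt}\right|_{t=0}
\quad\Longrightarrow\quad
L = \frac{1+\rho^2}{16\pi}\,\frac{R_{\text{tot}}^2}{\left.dR_{\text{el}}/dt\right|_{t=0}}$$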

The relative measurements are typically counting and/or coincidence measurements made with detectors located at small angles to the beam (scintillators, high-voltage current monitoring in the calorimeters, LUCID). Physics processes with high rates (e.g. W and Z production) can also be used to measure the luminosity. The precision of these measurements during the first run is expected to vary considerably between methods. The resulting luminosity values will be uploaded to the conditions database, and ultimately physics users will be able to choose between the different methods.

To better understand the concept behind the software tools used for integrating the luminosity, one needs to consider the luminosity block paradigm of ATLAS. The atomic unit of ATLAS data is the Luminosity Block (LB). One LB contains roughly 2 minutes of data taking, but this can vary with run conditions and other operational issues. Treating the data in small chunks of time is not a new concept, and it makes a lot of sense: it allows data to be selected according to the beam conditions and the detector performance. Accordingly, the average luminosity is stored per LB. The ATLAS trigger system provides the start and end times of each LB, and this information is recorded in databases which users can query by time or by LB number.
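As an illustration of the LB bookkeeping just described, the following minimal Python sketch models a table of LB boundaries that can be queried either by LB number or by time. It is not the ATLAS database interface: the class names `LumiBlockInfo` and `LumiBlockTable`, the field names, and the use of nanosecond timestamps are hypothetical choices made for this example.

```python
import bisect
from dataclasses import dataclass


@dataclass(frozen=True)
class LumiBlockInfo:
    """One luminosity block (hypothetical record layout)."""
    run: int
    lb: int
    start_ns: int  # LB start time (assumed: nanoseconds)
    end_ns: int    # LB end time, exclusive (assumed: nanoseconds)


class LumiBlockTable:
    """Toy in-memory stand-in for a database of LB boundaries."""

    def __init__(self, blocks):
        # Sort by start time so that time lookups can use binary search.
        self._blocks = sorted(blocks, key=lambda b: b.start_ns)
        self._starts = [b.start_ns for b in self._blocks]
        # Index the same records by (run, LB number) for direct lookups.
        self._by_number = {(b.run, b.lb): b for b in self._blocks}

    def by_number(self, run, lb):
        """Return the LB with this run and LB number, or None if unknown."""
        return self._by_number.get((run, lb))

    def by_time(self, t_ns):
        """Return the LB whose [start, end) window contains t_ns, or None."""
        i = bisect.bisect_right(self._starts, t_ns) - 1
        if i >= 0 and t_ns < self._blocks[i].end_ns:
            return self._blocks[i]
        return None


# Two hypothetical LBs of run 1: 120 s and 130 s long.
table = LumiBlockTable([
    LumiBlockInfo(run=1, lb=1, start_ns=0, end_ns=120 * 10**9),
    LumiBlockInfo(run=1, lb=2, start_ns=120 * 10**9, end_ns=250 * 10**9),
])
print(table.by_time(200 * 10**9).lb)  # 2
print(table.by_number(1, 1).end_ns)   # 120000000000
```

A time lookup uses binary search over the sorted start times, so each query costs O(log n) in the number of stored LBs.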

Integrating the luminosity over a subset of the data simply means obtaining the list of the corresponding luminosity blocks, querying the database for each of them, and summing up the values using the simplified 'discretized integral formula':

$$L_{\text{tot}} = \sum_{i} \Delta t_i \, L_i$$

where $L_{\text{tot}}$ is the total integrated luminosity, the index $i$ runs over the selected list of LBs, $\Delta t_i$ is the duration of the $i$-th LB for a given trigger, and $L_i$ is the average instantaneous luminosity of the $i$-th LB in the data.
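The discretized integral translates directly into code. The minimal Python sketch below assumes each selected LB is given as a pair (Δt_i in seconds, L_i in cm⁻² s⁻¹) and converts the result to nb⁻¹ (1 nb⁻¹ = 10³³ cm⁻²). The function names and input layout are hypothetical, and the sketch takes each Δt_i as given, so any trigger-dependent corrections are assumed to be already included in those values.

```python
# Conversion: 1 nb^-1 = 1e33 cm^-2 (since 1 b = 1e-24 cm^2).
CM2_PER_INV_NB = 1e33


def integrated_luminosity(lumi_blocks):
    """Discretized integral L_tot = sum_i dt_i * L_i.

    lumi_blocks: iterable of (dt_i, L_i) pairs, with dt_i in seconds and
    L_i the average instantaneous luminosity in cm^-2 s^-1.
    Returns the integrated luminosity in cm^-2.
    """
    return sum(dt * lumi for dt, lumi in lumi_blocks)


def to_inverse_nanobarn(lumi_cm2):
    """Convert an integrated luminosity from cm^-2 to nb^-1."""
    return lumi_cm2 / CM2_PER_INV_NB


# Two hypothetical LBs: 120 s at 1e30 and 60 s at 2e30 cm^-2 s^-1.
selected = [(120.0, 1e30), (60.0, 2e30)]
print(to_inverse_nanobarn(integrated_luminosity(selected)))  # approximately 0.24
```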

So far so good: if all the information is available, summing up the luminosity is straightforward. But there is an extremely important task that must be done before any such integration: users need a tool that keeps track of exactly which luminosity blocks have been processed in a job and stores this information in an established file format. Why is this so important? Because in today's Computing Grid era, analysis jobs run on a variety of computing sites and the data are distributed across the world. If the book-keeping is not handled correctly and a few luminosity blocks are missing at a particular computing site, a systematic error is introduced into the denominator of a cross-section formula. Such a mistake could arise, for example, if the data files were not properly transferred to the site, if the events in a luminosity block were not all processed, or for any other reason. Moreover, it is difficult to monitor remote jobs in detail.
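A minimal Python sketch of the kind of check such book-keeping makes possible: compare, per luminosity block, the number of events expected in the input with the number actually processed, and flag any block that is missing or incomplete. The function names `incomplete_lumi_blocks` and `check_bookkeeping` and the event-count comparison are hypothetical illustrations, not the logic of the ATLAS tools.

```python
def incomplete_lumi_blocks(expected_events, seen_events):
    """Return the sorted (run, lb) keys whose events were not all processed.

    Both arguments map (run, lb) -> number of events. An LB absent from
    seen_events (e.g. its file never reached the site) counts as 0 events.
    """
    return sorted(
        key for key, n_expected in expected_events.items()
        if seen_events.get(key, 0) != n_expected
    )


def check_bookkeeping(expected_events, seen_events):
    """Fail loudly instead of silently under-counting the luminosity."""
    bad = incomplete_lumi_blocks(expected_events, seen_events)
    if bad:
        raise RuntimeError(f"{len(bad)} luminosity block(s) incomplete: {bad}")


# Hypothetical job: LB 2 lost one event, LB 3 was never delivered.
expected = {(1, 1): 100, (1, 2): 80, (1, 3): 90}
seen = {(1, 1): 100, (1, 2): 79}
print(incomplete_lumi_blocks(expected, seen))  # [(1, 2), (1, 3)]
```

Raising an error, as in this sketch, is one possible design choice: it ensures the problem is noticed before a cross section is computed, rather than silently dropping the affected blocks.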

A set of classes has been developed to provide the necessary infrastructure for users to deal with these issues. The classes have been collected in the LumiBlockComps package. Together with a command-line executable, they support four main tasks:

- Provide a container to store the information on the collection of luminosity blocks: LumiBlockCollection

- Provide a class which book-keeps the processing of luminosity blocks in an analysis job: LumiBlockMetaDataTool

- Provide an interface class in the ATLAS software framework, Athena, for the luminosity integration: ILumiCalcSvc

- Provide an executable to do the same integration from command line: iLumiCalc.exe
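To make the container idea concrete, the following Python sketch stores a collection of processed luminosity blocks compactly as per-run ranges of consecutive LB numbers. It is only a loose analogue of what a container such as LumiBlockCollection provides; the range representation and the helper names `to_ranges` and `from_ranges` are assumptions of this sketch, not the actual implementation.

```python
def to_ranges(lumi_blocks):
    """Compress (run, lb) pairs into sorted, inclusive (run, first_lb, last_lb) ranges."""
    ranges = []
    for run, lb in sorted(set(lumi_blocks)):
        if ranges and ranges[-1][0] == run and ranges[-1][2] == lb - 1:
            # Consecutive LB in the same run: extend the current range.
            ranges[-1] = (run, ranges[-1][1], lb)
        else:
            # New run or a gap in LB numbers: start a new range.
            ranges.append((run, lb, lb))
    return ranges


def from_ranges(ranges):
    """Expand inclusive (run, first_lb, last_lb) ranges back into (run, lb) pairs."""
    return [(run, lb) for run, first, last in ranges for lb in range(first, last + 1)]


# Hypothetical processed LBs, unordered and with one duplicate.
processed = [(2, 5), (1, 3), (1, 4), (1, 5), (1, 7), (2, 6), (1, 4)]
print(to_ranges(processed))  # [(1, 3, 5), (1, 7, 7), (2, 5, 6)]
```

Storing ranges rather than individual LB numbers keeps the record short for long runs, while the round trip through `from_ranges` recovers the exact set of processed blocks.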

The output of a LumiBlockMetaDataTool-based Athena job is an XML file which stores the list of luminosity blocks that were actually processed. When ILumiCalcSvc is used in a user analysis job, the integrated luminosity is reported in the Athena job output. ILumiCalcSvc has several parameters: trigger chain name(s), luminosity block collections, etc. But perhaps the easiest way to calculate the integrated luminosity is with the command-line tool "iLumiCalc.exe", which can take the XML file produced by a grid job and recalculate the integrated luminosity, so that the result of the grid job can be cross-checked from the command line. iLumiCalc.exe has a set of command-line parameters: users can specify the list of luminosity blocks directly on the command line, as an XML file (see the ATLAS Run Query) or as a ROOT file. The results are printed on the screen and also saved in several output file formats. One of them is an XML file which includes the trigger information and can be reused for another calculation. Useful plots can be created on demand, and a scale factor can be applied to scale the luminosity by a constant.
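For a quick cross-check from a script, an XML list of luminosity blocks can be read with the Python standard library. The element and attribute names below (`LumiBlockCollection`, `Run`, `LBRange` with `Start`/`End`) are an assumed layout chosen for illustration; check the tutorial pages for the actual schema accepted by iLumiCalc.exe before relying on it. The function name `read_lb_ranges` and the run and LB numbers are hypothetical.

```python
import xml.etree.ElementTree as ET


def read_lb_ranges(xml_text):
    """Return inclusive (run, first_lb, last_lb) tuples from an LB-list XML string.

    Assumed layout: each <LumiBlockCollection> holds one <Run> element and
    one or more <LBRange Start=".." End=".."/> elements.
    """
    root = ET.fromstring(xml_text)
    ranges = []
    for coll in root.iter("LumiBlockCollection"):
        run = int(coll.findtext("Run"))
        for lb_range in coll.iter("LBRange"):
            ranges.append((run, int(lb_range.get("Start")), int(lb_range.get("End"))))
    return ranges


# Hypothetical input with made-up run and LB numbers.
EXAMPLE = """<LumiRangeCollection>
  <NamedLumiRange>
    <LumiBlockCollection>
      <Run>1000</Run>
      <LBRange Start="1" End="10"/>
      <LBRange Start="15" End="20"/>
    </LumiBlockCollection>
  </NamedLumiRange>
</LumiRangeCollection>"""

ranges = read_lb_ranges(EXAMPLE)
print(ranges)                                               # [(1000, 1, 10), (1000, 15, 20)]
print(sum(last - first + 1 for _, first, last in ranges))  # 16
```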

A few words should also be devoted to the interaction of the luminosity tools with the data quality flag selection. Each analysis requires certain data and detector quality conditions, depending on the physics process under investigation. In practice, this means that users should analyse only those luminosity blocks for which the detector conditions were deemed good enough to measure the final-state particles with the required precision. Software tools have therefore been developed to filter luminosity blocks using data quality flags. These data quality selector tools (the so-called good run lists tools) were developed in close connection with the luminosity tools, and any complete physics analysis must rely on both sets of tools.
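Combining the two sets of tools boils down to a set intersection followed by the luminosity sum. In this minimal Python sketch, both the processed LBs and the good run list are assumed to be available as (run, lb) pairs; the function names and inputs are hypothetical, and the luminosity values are in arbitrary units.

```python
def apply_good_run_list(processed, good):
    """Sorted (run, lb) pairs that were both processed and pass the data quality selection."""
    return sorted(set(processed) & set(good))


def good_luminosity(processed, good, lumi_per_lb):
    """Sum dt_i * L_i over the LBs kept by apply_good_run_list.

    lumi_per_lb maps (run, lb) -> (dt_i, L_i).
    """
    total = 0.0
    for key in apply_good_run_list(processed, good):
        dt, lumi = lumi_per_lb[key]
        total += dt * lumi
    return total


# Hypothetical inputs: LB 1 fails the data quality selection, LB 4 was not processed.
processed = [(1, 1), (1, 2), (1, 3)]
good = [(1, 2), (1, 3), (1, 4)]
lumi_per_lb = {(1, 1): (100.0, 1.0), (1, 2): (120.0, 2.0),
               (1, 3): (60.0, 3.0), (1, 4): (10.0, 5.0)}
print(apply_good_run_list(processed, good))           # [(1, 2), (1, 3)]
print(good_luminosity(processed, good, lumi_per_lb))  # 420.0
```

The intersection matters in both directions: an LB in the good run list that was never processed must not add luminosity, and neither may a processed LB that fails the data quality selection.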

To get started with these tools, users should consult the tutorial pages listed below.

Feedback is welcome, and we hope the luminosity processing and calculator tools will be useful for physics analysis.

Luminosity block and calculator tools tutorials:

https://twiki.cern.ch/twiki/bin/view/Atlas/CoolLumiCalc

https://twiki.cern.ch/twiki/bin/view/Atlas/CoolLumiCalcTutorial

Data Quality selector tools and tutorials:

https://twiki.cern.ch/twiki/bin/view/AtlasProtected/GoodRunListsForData

https://twiki.cern.ch/twiki/bin/viewauth/Atlas/GoodRunsListsTutorial

Balint Radics, University of Bonn
