ATLAS e-News

23 February 2011

Software validation

16 November 2009

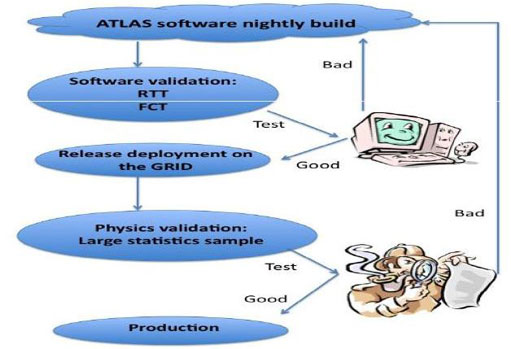

A sketch of the ATLAS simulation software validation chain from Andreu Pacheco’s presentation at the Barcelona meeting

The ATLAS software is an ever-evolving creature. In the final weeks before beam, and onwards into full data-taking, the chain of checks and re-checks which ensures that the code runs as it ought to is more important than ever.

Davide Costanzo spends most of his time on software validation, making sure that the software, which is compiled every night and deployed at Tier-0 almost every day, is technically stable: “Behind me, there is a group of shifters covering 24 hours a day. We check the software to make sure it doesn’t have any bugs before deploying it. If it crashes after it deploys, we make sure that it gets fixed in a very short period of time.”

Protocol for dealing with problems at Tier-0 depends on the severity of the glitch. “If it affects only one per cent of the jobs, then you just carry on and accept the possibility that one or two jobs will fail,” says Davide. But if a significantly higher proportion of jobs looks set to fail, action is taken on the spot, either by disabling the algorithms associated with the problem system, or – if a fix is on the horizon – stopping running altogether until that fix is put into the code, resulting in a delay in processing the data.
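The decision logic described above can be sketched in a few lines of Python. This is an illustration only, not the actual Tier-0 procedure: the function name `triage`, the `Action` labels and the exact one per cent cut-off are assumptions made for the example, since the article says only that a failure rate of about one per cent is tolerated and that a "significantly higher" one is not.

```python
from enum import Enum


class Action(Enum):
    """Hypothetical labels for the three responses described in the text."""
    CARRY_ON = "carry on and accept a few failed jobs"
    DISABLE_ALGORITHMS = "disable the algorithms tied to the problem system"
    PAUSE_PROCESSING = "stop running until the fix is in the code"


# Assumed cut-off: the text only says roughly one per cent is acceptable.
MINOR_FAILURE_FRACTION = 0.01


def triage(failed_jobs: int, total_jobs: int, fix_imminent: bool) -> Action:
    """Pick a response from the fraction of failing jobs."""
    if total_jobs <= 0:
        raise ValueError("total_jobs must be positive")
    fraction = failed_jobs / total_jobs
    if fraction <= MINOR_FAILURE_FRACTION:
        return Action.CARRY_ON
    # Above the cut-off, the choice depends on whether a fix is on the horizon.
    if fix_imminent:
        return Action.PAUSE_PROCESSING
    return Action.DISABLE_ALGORITHMS
```

In this sketch, pausing costs processing time while disabling algorithms costs reconstruction content, which mirrors the trade-off described in the text.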

“For something relatively important, we normally manage to fix it in less than 24 hours, and I’m sure it’s going to be even better once we have trained to do it,” says Davide. He expects the Tier-0 to be able to cope with a delay of up to 24 hours during data taking, which it will absorb by catching up during periods when there is no beam.

At the end of the release building cycle, Iacopo Vivarelli looks at reconstruction run-times and at which, if any, parts of the software failed. He also queries whether the software is actually doing what it’s supposed to do; whether developers’ improvements to the code have been fruitful. “We try to compare the performance of the software in terms of the output – how good the reconstruction is and how fast it goes – with the results of previous releases. We look for electron efficiencies, muon efficiencies, jet energy reconstruction, jet resolution… things like that,” he explains.

Extracting these figures requires high statistics, so a sample of around 500,000 events is simulated, encompassing every possible event scenario that is important to ATLAS analyses. This takes some time, even with the Grid, so the exercise is run once every two weeks rather than nightly. Experts from each of the ATLAS physics and performance groups make plots from the output files and feed the findings of their comparisons back to Iacopo.

While the reconstruction and analysis of simulated data is being honed, an analogous job is of course being done with real data. For the moment, the real data is made up entirely of cosmics. Since the nightly runs can process at most 1000 events over a few hours – equivalent to just 10 seconds or so of collision data – ‘Big Chain Tests’ are run on a monthly basis. During these, several million events are run over a few hours, to stress-test the system and make sure that it can run smoothly at a high data rate on these sorts of timescales.

Peter Onyisi discusses how the data quality software can be tested as well: “The monitoring code runs as part of [the nightly] test, so we can see if the correct histograms are getting produced and displayed. Conversely we can use these histograms to monitor changes in the software, since as the test is run over a fixed sample of events, any changes in the output indicate alterations in the reconstruction that should be understood,” he explains.
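The idea Peter describes, using a fixed event sample so that any change in the output histograms flags a change in the reconstruction, can be illustrated with a minimal sketch. It assumes, purely for the example, that each histogram is a plain list of bin contents keyed by name; the function `changed_histograms` and the histogram names are hypothetical and do not come from the ATLAS data quality code.

```python
def changed_histograms(reference, current, tolerance=0.0):
    """Return the names of histograms that differ between two test runs.

    `reference` and `current` map a histogram name to its list of bin
    contents. A histogram counts as changed if it is missing from either
    run, has a different number of bins, or has any bin differing by more
    than `tolerance`.
    """
    changed = []
    for name in sorted(set(reference) | set(current)):
        ref_bins = reference.get(name)
        cur_bins = current.get(name)
        if ref_bins is None or cur_bins is None or len(ref_bins) != len(cur_bins):
            changed.append(name)
            continue
        if any(abs(r - c) > tolerance for r, c in zip(ref_bins, cur_bins)):
            changed.append(name)
    return changed


# Same fixed sample, two nightly builds (made-up numbers).
previous_night = {"muon_pt": [1, 2, 3], "jet_energy": [4, 5]}
tonight = {"muon_pt": [1, 2, 3], "jet_energy": [4, 6]}
print(changed_histograms(previous_night, tonight))  # prints ['jet_energy']
```

Because the input sample is fixed, anything this check reports reflects a change in the software rather than in the data, and it is then up to an expert to decide whether that change was intended.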

“Of course, physics validation should also validate real data,” says Reconstruction Integration Group co-ordinator, David Rousseau. “The simulation and reconstruction code is 90 per cent common, but until now, a gap between them has been natural, as we only had cosmic real data.”

Soon, the process of reducing that gap will begin, agrees Davide: “When we start taking collision data, people’s interest will move towards [that]. The physics validation effort, which is now traditionally centred on simulated data, and the data quality effort, which is centred on reconstructed data, will at some point have to merge to focus on real data.”

Awareness of this necessary shift has been a factor in some of the alterations made to the code over the last year. “We’ve been trying to put in the software things that are not supposed to be too much dependent on the differences between the simulation and the real data,” Iacopo says. In some cases, a gain in robustness against possible differences between the simulation and real data has come as a result of deliberately degrading performance. “What is an improvement is difficult to say,” he considers, adding: “but my feeling is that the software is basically ready for the reconstruction.”

Ahead of beam, tests are underway to explore what happens if parts of the detector are suddenly switched off. “We want to be able to reconstruct data in many different configurations. The code should be flexible enough to allow for this,” says David Rousseau.

As for what will happen once ATLAS sees beam, well, that is still partially under discussion. Right now, the mechanics of the code – making sure that it runs smoothly – are more heavily tested than its actual functionality – making sure that it does what it’s supposed to. A key aim, then, for the next few weeks will be to make the checking of simulated-data output more automatic.

“We are discussing about increasing the number of machines that we use every night to test, so that we will be able to move our two week cycle to a nightly basis, but with smaller samples of data – 100,000 events or less,” says Iacopo. “We would probably evolve towards the model of having shifters looking at that.”

From Davide’s perspective, things ought to calm down a little as problems are ironed out over the next few weeks, and new software releases will become a weekly occurrence rather than a daily one. But the lull will be temporary: “Eventually, again, there will be another lot of releases coming out when we start taking collision data, because there will be new [as yet unknown] problems coming up.”

Ceri Perkins, ATLAS e-News
