ATLAS e-News

23 February 2011

How data flow from Point1 to analysis sites

25 February 2008

Lots of things go into the ATLAS detector: electricity and many types of fluids flow into it, proton beams circulate through it, and a lot of intellectual power is injected into it! What comes out of Point1 is a precious stream of information from which we hope to distil our future understanding of particles and forces. In a materialistic view, each watt fed into the LHC and ATLAS yields roughly one byte per second of raw data flowing out of Point1.

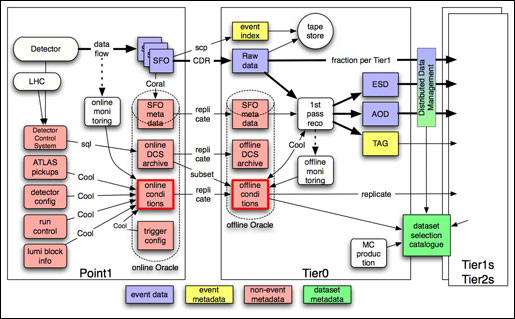

What does this stream of data look like? It is composed not only of event data but also of other information (metadata), such as the status of all sub-detectors, the LHC and the DAQ system, all of which is required to interpret the event data. The figure below gives an overview of the data and metadata flowing first from Point1 to Tier0 and then on to the Tier1/2s and the analysis sites.

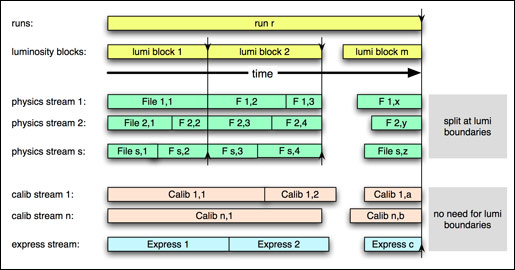

Here we concentrate on the (blue) event data stream. This is a composite stream: it carries not only physics events meant for analysis but also partial event data for detector calibration, a selection of events for express monitoring of data quality, and other, more technical streams that can capture rare error situations.

The physics events are further subdivided into streams according to classes of trigger signatures, e.g. electron/photon, muons/B-physics, jets/taus/missing energy, and minimum bias. An event can have more than one trigger signature, in which case it flows into several streams. The decision for this "inclusive" streaming (as opposed to "exclusive" streaming, where multi-signature events go to a separate overlap stream) was taken in autumn 2007 as the final act of the Data Streaming Study Group. The group also established the feasibility of having more than one raw physics data stream, through quantitative studies of the multi-signature overlaps to be expected.
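To make the difference between the two schemes concrete, here is a minimal Python sketch. The stream labels (`egamma`, `muons`, `jets`) and the representation of an event as a number plus a set of fired signatures are illustrative assumptions, not the ATLAS data format or the event filter's actual code.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# Illustrative event representation (assumption): (event number, fired signatures).
Event = Tuple[int, Set[str]]


def stream_inclusive(events: Iterable[Event]) -> Dict[str, List[int]]:
    """Inclusive streaming: an event is written to every stream it fired."""
    streams: Dict[str, List[int]] = defaultdict(list)
    for number, signatures in events:
        for sig in sorted(signatures):
            streams[sig].append(number)
    return dict(streams)


def stream_exclusive(events: Iterable[Event]) -> Dict[str, List[int]]:
    """Exclusive streaming: multi-signature events go only to an overlap stream."""
    streams: Dict[str, List[int]] = defaultdict(list)
    for number, signatures in events:
        if len(signatures) == 1:
            (sig,) = signatures
            streams[sig].append(number)
        elif len(signatures) > 1:
            streams["overlap"].append(number)
    return dict(streams)


events = [
    (1, {"egamma"}),
    (2, {"egamma", "muons"}),  # a multi-signature event
    (3, {"jets"}),
]
print(stream_inclusive(events))  # {'egamma': [1, 2], 'muons': [2], 'jets': [3]}
print(stream_exclusive(events))  # {'egamma': [1], 'overlap': [2], 'jets': [3]}
```

In the inclusive scheme event 2 is written twice, so the total volume written grows with the fraction of multi-signature events; this duplication is why the size of the expected overlaps matters when deciding how many streams to have.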

Data streams don’t flow continuously; they are quantized into so-called luminosity blocks, each typically one minute long, during which conditions such as the instantaneous luminosity and the detector status are stable. The event filter (3rd level of the ATLAS trigger) subfarm outputs each handle a slice of the event flow and write data files per luminosity block and stream, as shown in the picture below. At the hardware level, of course, the data in the end flow serially again over optical fibres, for example from Point1 to Tier0.
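The file organization described above can be sketched as grouping events by a (run, luminosity block, stream, subfarm output) key. This is a minimal illustration under assumptions: the `RawEvent` record, its field names and the file-naming pattern are hypothetical and do not reproduce the real ATLAS raw-data file names.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class RawEvent:  # hypothetical record, for illustration only
    run: int
    lumi_block: int   # luminosity block: ~1 minute of stable conditions
    stream: str       # stream assigned from the trigger signatures
    sfo: int          # which event filter subfarm output handled the event
    event_number: int


def group_into_files(events: List[RawEvent]) -> Dict[str, List[int]]:
    """Collect event numbers into one file per (run, lumi block, stream, SFO)."""
    files: Dict[Tuple[int, int, str, int], List[int]] = defaultdict(list)
    for ev in events:
        files[(ev.run, ev.lumi_block, ev.stream, ev.sfo)].append(ev.event_number)
    # Hypothetical naming scheme, for illustration only.
    return {
        f"run{run}.lb{lb:04d}.{stream}.sfo{sfo}.raw": numbers
        for (run, lb, stream, sfo), numbers in sorted(files.items())
    }


events = [
    RawEvent(1000, 1, "egamma", 1, 11),
    RawEvent(1000, 1, "muons", 1, 12),
    RawEvent(1000, 1, "egamma", 2, 13),
    RawEvent(1000, 2, "egamma", 1, 14),
    RawEvent(1000, 1, "egamma", 1, 15),
]
for name, numbers in group_into_files(events).items():
    print(name, numbers)
```

Because every file belongs to exactly one luminosity block, the conditions recorded for that block (luminosity, detector status) apply to all events in the file.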

Streaming continues into the results of the subsequent data processing steps. Event Summary Data (ESD) are organized into the same streams as the raw data, and Analysis Object Data (AOD) can subdivide these streams further as required, e.g. by separating muons from B-physics. Importantly, a global overview of all events can be maintained with the event tags: a tiny (about 1 kB per event) subset of the event data carrying the most important event classification information, e.g. the trigger decision, together with the storage location of the event.
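The role of the event tags can be illustrated with a small sketch: events are selected by looking only at their tags, and the stored location says which file (and which entry in it) to read afterwards. The `EventTag` fields shown here, including `n_muons`, `file_id` and `entry`, are hypothetical stand-ins, not the actual ATLAS TAG schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class EventTag:  # hypothetical tag layout, for illustration only
    run: int
    event_number: int
    passed_triggers: FrozenSet[str]  # the trigger decision
    n_muons: int                      # one example classification quantity
    file_id: str                      # storage location: file holding the full event
    entry: int                        # position of the event inside that file


def locate(tags: List[EventTag], trigger: str, min_muons: int = 0) -> Dict[str, List[int]]:
    """Select events using only their tags; return file -> entries to read."""
    wanted: Dict[str, List[int]] = defaultdict(list)
    for tag in tags:
        if trigger in tag.passed_triggers and tag.n_muons >= min_muons:
            wanted[tag.file_id].append(tag.entry)
    return dict(wanted)


tags = [
    EventTag(1000, 1, frozenset({"mu20"}), 1, "fileA", 0),
    EventTag(1000, 2, frozenset({"e25"}), 0, "fileB", 0),
    EventTag(1000, 3, frozenset({"mu20", "e25"}), 2, "fileA", 1),
    EventTag(1000, 4, frozenset({"mu20"}), 2, "fileC", 7),
]
print(locate(tags, "mu20", min_muons=2))  # {'fileA': [1], 'fileC': [7]}
```

Grouping the result by file means each file holding selected events only needs to be opened once, and files with no selected events are never touched.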

The main motivation for having more than one raw physics data stream is flexibility: multiple streams allow us to process just part of our huge data volume with priority if required. But flexibility is also needed in the definition of the streams themselves, which we may have to change if triggers are redefined, e.g. in response to initial beam conditions. This is why the streams are defined in terms of trigger signatures, together with the trigger menus themselves, in the trigger configuration database. The contents of this database are part of the metadata flow, which brings us back to the overall picture.
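Keeping stream definitions as configuration data rather than code can be sketched as follows. Here a JSON string stands in for the trigger configuration database, and the trigger names, stream names and priority values are all hypothetical; the point is only that redefining triggers changes the routing without touching the routing code.

```python
import json
from typing import Dict, List, Set

# Hypothetical stream definitions, standing in for the configuration database.
CONFIG_JSON = """
{
  "streams": {
    "egamma":  {"triggers": ["e25_medium", "g40_loose"], "priority": 1},
    "muons":   {"triggers": ["mu20", "2mu6_bphys"],      "priority": 2},
    "minbias": {"triggers": ["mbts_1"],                  "priority": 3}
  }
}
"""


def load_stream_definitions(text: str) -> Dict[str, dict]:
    """Parse the stream definitions from the configuration text."""
    return json.loads(text)["streams"]


def streams_for_event(passed: Set[str], streams: Dict[str, dict]) -> List[str]:
    """Inclusive routing: every stream listing at least one passed trigger."""
    return sorted(name for name, spec in streams.items() if passed & set(spec["triggers"]))


def processing_order(streams: Dict[str, dict]) -> List[str]:
    """Order streams by priority (lower number is processed first)."""
    return sorted(streams, key=lambda name: streams[name]["priority"])


streams = load_stream_definitions(CONFIG_JSON)
print(streams_for_event({"mu20", "e25_medium"}, streams))  # ['egamma', 'muons']
print(processing_order(streams))  # ['egamma', 'muons', 'minbias']

# A trigger menu change: only the configuration is edited, not the code.
streams["muons"]["triggers"].append("mu40")
print(streams_for_event({"mu40"}, streams))  # ['muons']
```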

More details on data streaming can be found in the study group's report on the ATLAS TWiki page DataStreamingReport.

Hans von der Schmitt

Max-Planck-Institut fuer Physik